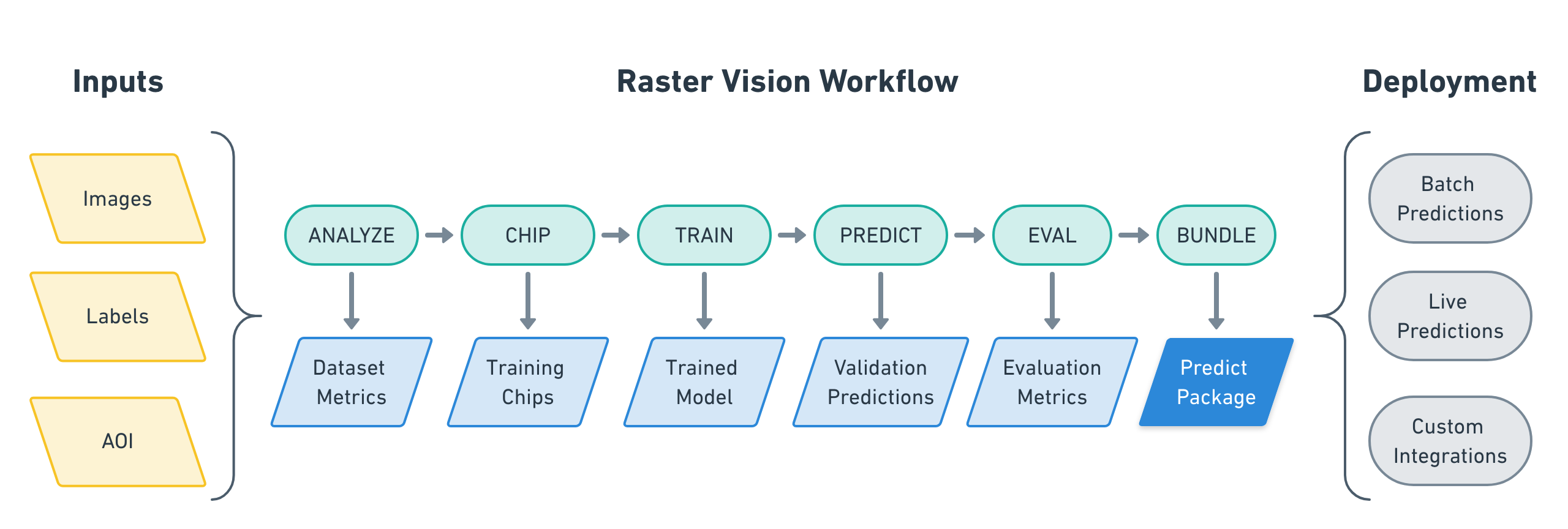

Our open source Raster Vision project makes it easy for teams to build computer vision models to understand and analyze geospatial imagery. Users don’t need to be experts in deep learning to quickly and repeatably configure experiments that execute a machine learning workflow. This workflow includes analyzing training data, creating training chips, training models, creating predictions, evaluating models, and bundling the model files and configuration for easy deployment.
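The workflow stages listed above can be pictured as an ordered pipeline. The following is only a minimal sketch of that idea and does not use Raster Vision's actual API: the stage labels, `run_workflow`, and `make_placeholder` are hypothetical names chosen for illustration, and each handler is a placeholder rather than a real analysis or training step.

```python
from typing import Callable, Dict, List

# Illustrative stage labels that mirror the workflow described above.
# They are assumptions for this sketch, not a documented interface.
STAGES: List[str] = ["analyze", "chip", "train", "predict", "eval", "bundle"]

Handler = Callable[[dict], None]


def run_workflow(handlers: Dict[str, Handler], stages: List[str] = STAGES) -> dict:
    """Run each stage in order, passing a shared state dict between stages."""
    state: dict = {"completed": []}
    for name in stages:
        handlers[name](state)            # each stage reads and updates the state
        state["completed"].append(name)  # record progress for repeatability
    return state


def make_placeholder(name: str) -> Handler:
    """Build a stand-in handler that only records that its stage ran."""
    def handler(state: dict) -> None:
        state[name] = f"{name} done"
    return handler


handlers = {name: make_placeholder(name) for name in STAGES}
result = run_workflow(handlers)
print(result["completed"])
```

Keeping the stage order in a single list is one simple way to make an experiment repeatable: running it again with the same configuration walks through the same steps in the same order.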

Raster Vision began with our work applying classification and segmentation to aerial and satellite imagery as part of international competitions.

The functionality in Raster Vision can be made accessible through Raster Foundry, Azavea’s product to help users gain insight from geospatial data quickly, repeatably, and at any scale.

To date, Raster Vision has been used to automate drone-based inspection of assets, find center-pivot irrigation systems across an entire state, recognize deforestation in the Amazon, monitor gas pipelines for construction activity, and localize buildings and roads in satellite imagery. Examples of how to use Raster Vision on open datasets are available in our examples repo.

Raster Vision is released under an Apache 2.0 open source license and is being actively developed in the open on GitHub.

Header image source: American Aerospace