This Month We Learned (TMWL) is a recurring blog series highlighting what we’ve learned in the last month. It gives us an opportunity to share short stories about what we’ve learned and to highlight the ways we’ve grown and learned both in our jobs and outside of them. This month we learned about multi-stage builds with Docker, PostgreSQL triggers, and variance analysis.

Matt McFarland

Aaron Su

James Santucci

Multi-stage Docker container builds

Matt McFarland

We’ve long since standardized on developing, building, and deploying our applications using container images. Like others, we realized the need to be mindful of the size and contents of the images we build. It’s easy to write a functional Dockerfile which produces images that consist of multiple, unnecessary layers or toolchains that were required to build application components, but are not necessary at runtime.

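Some best practices emerged to mitigate these issues, such as combining multiple RUN instructions into a single instruction. Because each RUN instruction creates a new layer, installing, using, and purging build dependencies in one instruction keeps them out of the final image: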

RUN buildDeps=" \
        build-essential \
    " \
    && apt-get update && apt-get install -y $buildDeps --no-install-recommends \
    && pip install --no-cache-dir -r requirements.txt \
    && apt-get purge -y --auto-remove $buildDeps

Another is to use multiple Dockerfiles in a builder pattern, where the output of a “build container” is copied to the host and then into a second container image that is ultimately used to run the build artifact. Together, these two techniques can effectively reduce the size of the final container image, but they come at the expense of readability and maintainability.

I recently had the opportunity to use a not-so-new Docker feature called multi-stage builds. It lets you use multiple FROM instructions within a single Dockerfile, each starting a new build stage, and copy files between stages without an intermediate step on the host. For example, in this (simplified) Dockerfile, we create a container image that contains only the .NET Core runtime, but installs and invokes tools from the .NET Core SDK in an intermediate, ephemeral stage to produce our application artifact:

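FROM mcr.microsoft.com/dotnet/core/aspnet:3.1-buster-slim AS base
WORKDIR /app
EXPOSE 80

FROM mcr.microsoft.com/dotnet/core/sdk:3.1-buster AS build
WORKDIR /src
# copy the project sources into the SDK stage before building
COPY . .
RUN dotnet build "MyApp.csproj" -c Release -o /app/build

# final image: the runtime-only base plus the build output
FROM base AS final
COPY --from=build /app/build .
ENTRYPOINT ["dotnet", "MyApp.dll"]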

You can also use the COPY --from=image:tag syntax to copy artifacts from images that are not defined as stages within the Dockerfile. This lets you pull in files from utility images and reduce duplication.

Ultimately, multi-stage builds can reduce the final container image size without resorting to the techniques described above. This is especially useful for languages whose build tooling is much larger than what the runtime environment needs. There are a few gotchas to consider: build stages that don’t inherit from a sibling stage won’t share things like ENV or ARG values, potentially forcing you to duplicate some instructions. Additionally, if you need to run slow commands like apt-get update in several stages, you’ll pay that cost multiple times instead of consolidating it in a shared stage. As always, there are trade-offs, but multi-stage builds can be an effective way to reduce complexity in a container-based build system.

PostgreSQL triggers

Aaron Su

This month, we launched Groundwork. Groundwork’s project list has a filter for projects with incomplete tasks, so that users can prioritize unfinished ones. To support this filter, we added a task status count column to the projects table, because the alternatives, such as filtering through a functional Scala pipeline or paginating via SQL table joins, required on-the-fly calculations. Since projects and tasks live in different tables linked by foreign keys, this approach required us to keep the task status count in sync with every operation on the tasks table. We wrote PostgreSQL triggers to ensure that the task status count field is updated no matter where a change originates (e.g., API interactions, database shell sessions, etc.).

A PostgreSQL trigger tells the database to call a specified function automatically whenever a given event occurs on its table. PostgreSQL provides two kinds of triggers, row-level and statement-level, and either kind can fire before or after the event. Row-level triggers invoke the function once per affected row, and statement-level triggers invoke it once per statement. We used a row-level trigger that fires after each INSERT, UPDATE, or DELETE on the tasks table.

When a row-level trigger invokes a function, the function has access to the special variables NEW and OLD, which describe what changed: NEW holds the new row for INSERT and UPDATE, and OLD holds the previous row for UPDATE and DELETE. The trigger definition looks like the following:

CREATE TRIGGER update_annotation_project_task_summary
AFTER INSERT OR UPDATE OR DELETE ON tasks
FOR EACH ROW EXECUTE PROCEDURE UPDATE_PROJECT_TASK_SUMMARY();

The function below, which must exist before the CREATE TRIGGER statement above is run, is invoked every time one of those operations is performed on the tasks table. It reads the annotation_project_id from NEW or OLD depending on the operation, which is available in a variable called TG_OP. Then, for each affected row, it updates the corresponding project’s task_status_summary column by counting the statuses of that project’s tasks. Finally, it returns a value: we return NULL because the return value of a row-level trigger fired AFTER is always ignored.

CREATE OR REPLACE FUNCTION UPDATE_PROJECT_TASK_SUMMARY()
RETURNS trigger AS
$BODY$
DECLARE
  op_project_id uuid;
BEGIN
  -- the NEW variable holds the new row for INSERT/UPDATE operations
  -- the OLD variable holds the previous row for UPDATE/DELETE operations
  -- store the annotation project ID
  IF TG_OP = 'INSERT' OR TG_OP = 'UPDATE' THEN
    op_project_id := NEW.annotation_project_id;
  ELSE
    op_project_id := OLD.annotation_project_id;
  END IF;

  -- update task summary for the stored annotation project
  UPDATE public.annotation_projects
  SET task_status_summary = task_statuses.summary
  FROM (
    SELECT
      CREATE_TASK_SUMMARY(
        jsonb_object_agg(
          statuses.status,
          statuses.status_count
        )
      ) AS summary
    FROM (
      SELECT status, COUNT(id) AS status_count
      FROM public.tasks
      WHERE annotation_project_id = op_project_id
      GROUP BY status
    ) statuses
  ) AS task_statuses
  WHERE annotation_projects.id = op_project_id;

  -- result is ignored since this is an AFTER trigger
  RETURN NULL;
END;
$BODY$
LANGUAGE plpgsql;
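To make the aggregation concrete, here is a small in-memory Scala model of what the trigger function computes for one project. It is a sketch for illustration only, not Groundwork code: Task, TgOp, projectIdFor, statusCounts, and the "todo"/"done" statuses are hypothetical names, and the model stops at the raw status counts because the definition of CREATE_TASK_SUMMARY isn’t shown here. It assumes Scala 2.13 or 3 in script mode.

```scala
// Hypothetical in-memory model of the trigger function; not Groundwork code.
final case class Task(id: Int, annotationProjectId: String, status: String)

// Stand-in for the TG_OP values the trigger fires on.
sealed trait TgOp
case object Insert extends TgOp
case object Update extends TgOp
case object Delete extends TgOp

// Mirrors the IF TG_OP block: read NEW for INSERT/UPDATE, OLD for DELETE.
def projectIdFor(op: TgOp, newRow: Option[Task], oldRow: Option[Task]): Option[String] = {
  val row = op match {
    case Insert | Update => newRow
    case Delete          => oldRow
  }
  row.map(_.annotationProjectId)
}

// Mirrors SELECT status, COUNT(id) ... WHERE annotation_project_id = ...
// GROUP BY status, followed by jsonb_object_agg(status, status_count).
def statusCounts(tasks: Seq[Task], projectId: String): Map[String, Int] =
  tasks
    .filter(_.annotationProjectId == projectId)
    .groupBy(_.status)
    .map { case (status, ts) => status -> ts.size }

val sampleTasks = Seq(
  Task(1, "p1", "todo"),
  Task(2, "p1", "done"),
  Task(3, "p1", "todo"),
  Task(4, "p2", "done")
)

// A DELETE of task 4 reads OLD, so project p2 is the one whose summary is refreshed.
val affected = projectIdFor(Delete, None, Some(sampleTasks(3)))
val p1Summary = statusCounts(sampleTasks, "p1") // == Map("todo" -> 2, "done" -> 1)
```

One difference worth knowing: for a project with no tasks, this model returns an empty map, whereas PostgreSQL aggregates other than count return NULL when they see no rows, so jsonb_object_agg would pass a NULL to CREATE_TASK_SUMMARY.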

The official PostgreSQL documentation also provides detailed descriptions and examples for creating triggers and for defining trigger functions.

Variance

James Santucci

This month I learned about analyzing type variance. A group of us are reading Gabriel Volpe’s Practical Functional Programming in Scala, and one topic that came up is where the imap function comes from. This question took us to the cats page on the Invariant typeclass, which helped us realize that no one in the room could explain variance, so I learned it and put together a patat presentation on common typeclasses and variance. You can see my patat at this gist.

Two resources that were very helpful for me were Chapter 3 of Thinking With Types and the Cats library’s Typeclasses documentation.

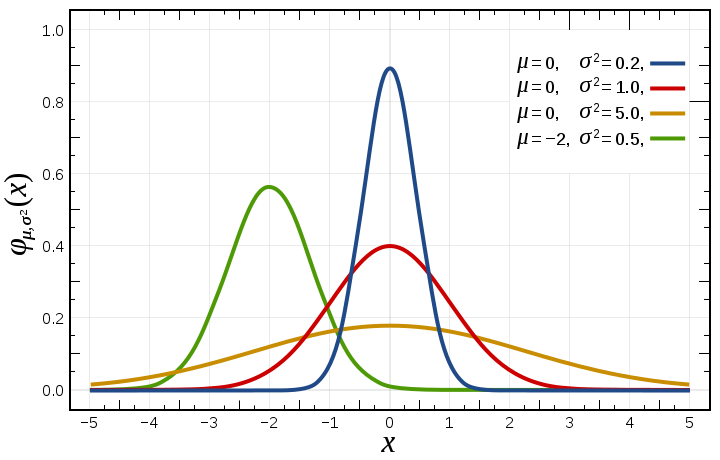

In short, analyzing the variance of a type constructor F tells you how you can get from an F[A] to an F[B], given functions between A and B. It tells us which kinds of functors we can implement for the type: covariant, contravariant, or invariant. That’s pretty abstract, so here’s an example in Scala.

Let’s say we have two traits, ExcitedParser[A] and ExcitedPrinter[A]. ExcitedParser[A] knows how to read values of type A from strings, and ExcitedPrinter[A] knows how to turn values of type A into strings followed by exclamation points.

trait ExcitedParser[A] {
  def read(s: String): A
}

trait ExcitedPrinter[A] {
  def show(a: A): String
  def print(a: A): String = show(a) ++ "!!!"
}

The parser represents a function of type String => A. A appears only on the right side of the arrow, so the parser is covariant in A. This means that ExcitedParser can have a map function that turns an ExcitedParser[A] into an ExcitedParser[B] given any function A => B.
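Here is a minimal sketch of that map, written as a plain function rather than through cats’ Functor so that it runs without dependencies. The stringParser (which simply drops a trailing "!!!") and lengthParser are hypothetical examples, and the code assumes Scala 2.13 or 3 in script mode.

```scala
// ExcitedParser as in the post; `map` is a hand-rolled helper, not cats' Functor.
trait ExcitedParser[A] {
  def read(s: String): A
}

// Covariant map: run the original parser, then apply f to its result.
def map[A, B](pa: ExcitedParser[A])(f: A => B): ExcitedParser[B] =
  new ExcitedParser[B] {
    def read(s: String): B = f(pa.read(s))
  }

// Hypothetical base parser: reads a String by dropping the trailing "!!!".
val stringParser: ExcitedParser[String] = new ExcitedParser[String] {
  def read(s: String): String = s.stripSuffix("!!!")
}

// Reuse stringParser with a String => Int function to get an Int parser.
val lengthParser: ExcitedParser[Int] = map(stringParser)(_.length)
val sampleLength = lengthParser.read("cerulean!!!") // 8
```

Because f is applied after read, the new parser never needs to know how the original one works internally.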

The printer represents a function of type A => String. A appears only on the left side of the arrow, so the printer is contravariant in A. This means that it’s possible to write a contravariant functor for it, so it should have a contramap function that turns an ExcitedPrinter[A] into an ExcitedPrinter[B] given any function B => A. This broke my brain roughly the first 17 times I learned it, so to be more concrete, let’s say you have an ExcitedPrinter for Strings:

val stringPrinter: ExcitedPrinter[String] = new ExcitedPrinter[String] {
  def show(a: String): String = a
}

It’s trivial to see that Strings can become strings. Let’s say you also have some type GoodColor, which naturally includes only a few colors from city names in Pokemon Red and Pokemon Blue. You also know how to turn any GoodColor into a String.

sealed abstract class GoodColor
case object Cerulean extends GoodColor
case object Viridian extends GoodColor
case object Saffron extends GoodColor

def colorToString(color: GoodColor): String = color match {
  case Cerulean => "cerulean"
  case Viridian => "viridian"
  case Saffron  => "saffron"
}

With this, you can create an ExcitedPrinter[GoodColor] using your ExcitedPrinter[String], since you can turn any GoodColor into a String, and then use the functions from ExcitedPrinter[String] to add exclamation points. That’s what contramap facilitates.

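val color: GoodColor = Cerulean
// ExcitedPrinter itself defines no contramap; this assumes a Contravariant[ExcitedPrinter]
// instance (e.g., from cats) and contramap syntax are in scope
val colorPrinter: ExcitedPrinter[GoodColor] = stringPrinter.contramap(colorToString)
colorPrinter.print(color) // "cerulean!!!"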
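ExcitedPrinter as written has no contramap method, so the call to stringPrinter.contramap above relies on one being supplied from elsewhere, such as a cats Contravariant instance. As a dependency-free sketch, here is a hand-rolled contramap provided through an implicit class; PrinterSyntax is our own hypothetical name, not part of cats, and the code assumes Scala 2.13 or 3 in script mode.

```scala
trait ExcitedPrinter[A] {
  def show(a: A): String
  def print(a: A): String = show(a) ++ "!!!"
}

// Hand-rolled stand-in for contramap syntax; PrinterSyntax is a hypothetical name.
implicit class PrinterSyntax[A](pa: ExcitedPrinter[A]) {
  // Contravariant: convert the B into an A first, then reuse pa.show.
  def contramap[B](f: B => A): ExcitedPrinter[B] = new ExcitedPrinter[B] {
    def show(b: B): String = pa.show(f(b))
  }
}

sealed abstract class GoodColor
case object Cerulean extends GoodColor
case object Viridian extends GoodColor
case object Saffron extends GoodColor

def colorToString(color: GoodColor): String = color match {
  case Cerulean => "cerulean"
  case Viridian => "viridian"
  case Saffron  => "saffron"
}

val stringPrinter: ExcitedPrinter[String] = new ExcitedPrinter[String] {
  def show(a: String): String = a
}

// The explicit [GoodColor] keeps type inference simple across Scala versions.
val colorPrinter: ExcitedPrinter[GoodColor] = stringPrinter.contramap[GoodColor](colorToString)
val excited = colorPrinter.print(Viridian) // "viridian!!!"
```

Note the direction: contramap applies f before show, which is why it needs B => A rather than A => B.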

Fine, so what’s invariance? Let’s add a new ExcitedCodec[A] trait that requires the functions from the printer and the parser:

trait ExcitedCodec[A] {
  def read(s: String): A
  def show(a: A): String
  def print(a: A): String = show(a) ++ "!!!"
}

In this case, the A in the trait’s functions appears on both the right and left sides of arrows. What this means is that we can’t get an ExcitedCodec[B] from an ExcitedCodec[A] unless we can convert A => B and B => A, and that’s exactly what imap, the function required by the Invariant type class, demands:

def imap[A, B](fa: F[A])(f: A => B)(g: B => A): F[B]
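To see why imap needs both functions, here is a hand-rolled sketch of an Invariant instance for ExcitedCodec. The Invariant trait below only mirrors the imap signature above (cats.Invariant has the same shape, and a real project would more likely use it). The String and Int codecs are hypothetical examples, and the code assumes Scala 2.13 or 3 in script mode.

```scala
// Hand-rolled Invariant mirroring the imap signature above.
trait Invariant[F[_]] {
  def imap[A, B](fa: F[A])(f: A => B)(g: B => A): F[B]
}

trait ExcitedCodec[A] {
  def read(s: String): A
  def show(a: A): String
  def print(a: A): String = show(a) ++ "!!!"
}

val codecInvariant: Invariant[ExcitedCodec] = new Invariant[ExcitedCodec] {
  def imap[A, B](fa: ExcitedCodec[A])(f: A => B)(g: B => A): ExcitedCodec[B] =
    new ExcitedCodec[B] {
      // read produces values (covariant position), so it needs A => B ...
      def read(s: String): B = f(fa.read(s))
      // ... while show consumes them (contravariant position), so it needs B => A.
      def show(b: B): String = fa.show(g(b))
    }
}

// Hypothetical base codec for Strings that tolerates the trailing "!!!".
val stringCodec: ExcitedCodec[String] = new ExcitedCodec[String] {
  def read(s: String): String = s.stripSuffix("!!!")
  def show(a: String): String = a
}

// Both directions are required: String => Int for read, Int => String for show.
val intCodec: ExcitedCodec[Int] = codecInvariant.imap(stringCodec)(_.toInt)(_.toString)
val parsed = intCodec.read("42!!!") // 42
val printed = intCodec.print(7)     // "7!!!"
```

This is only a sketch: _.toInt throws on malformed input, which a production parser would need to handle.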