Azavea is excited to announce the release candidate for Raster Vision 0.9. Since our first release in October, many people have begun using Raster Vision, and we thank these early adopters for the great feedback they have provided. This release has mostly focused on fixing bugs, making it easier to set up and get started, improving computational performance, and adding support for training models on labels from OpenStreetMap. In this blog post, we will highlight the most interesting changes in 0.9. A full listing can be found in our Change Log.

What is Raster Vision?

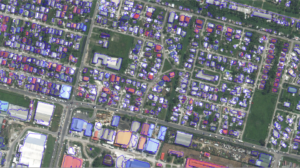

Raster Vision is an open source framework for deep learning on aerial and satellite imagery. It implements a configurable experiment workflow for building chip classification, object detection, and semantic segmentation models on massive, geospatial imagery. The framework is extendable to new data sources, tasks, and backends, and has support for running locally and in the cloud. More information can be found in our documentation.

Improvements in 0.9

Performance

- The `chip` and `predict` commands can now be parallelized. To enable this, use the `--splits n` option to `rastervision run`, which will distribute the work into `n` groups which each process a subset of the scenes.
- There are now distinct CPU and GPU job queues in the AWS Batch configuration, and the `analyze`, `chip`, and `eval` commands will run on CPUs to make better use of resources.
- The `StatsAnalyzer`, which computes imagery statistics, can now be sped up using sub-sampling. For example, to use 5% of the imagery, use `StatsAnalyzerConfig.with_sample_prob(0.05)`.
- We now conserve disk space by only storing imagery when it is needed.
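To illustrate why sub-sampling speeds up the statistics pass, here is a minimal, library-independent sketch that estimates per-channel means and standard deviations from a random fraction of pixels. The function `sampled_channel_stats` and its list-of-pixels input are hypothetical and written only for illustration; this is not the `StatsAnalyzer` implementation, which may sample at a coarser granularity (for example, whole windows) rather than individual pixels.

```python
import math
import random
from typing import List, Sequence, Tuple


def sampled_channel_stats(
    pixels: Sequence[Sequence[float]], sample_prob: float, seed: int = 0
) -> Tuple[List[float], List[float]]:
    """Estimate per-channel means and (population) standard deviations.

    Hypothetical helper: draws about ``sample_prob * len(pixels)`` pixels
    without replacement and computes the statistics on that subset only.
    """
    if not 0.0 < sample_prob <= 1.0:
        raise ValueError("sample_prob must be in (0, 1]")
    if len(pixels) == 0:
        raise ValueError("pixels must be non-empty")

    rng = random.Random(seed)  # seeded so repeated runs give the same estimate
    k = max(1, round(len(pixels) * sample_prob))
    # Only the k sampled pixels are visited by the statistics code below.
    sample = [pixels[i] for i in rng.sample(range(len(pixels)), k)]

    n = len(sample)
    num_channels = len(sample[0])
    means = [sum(p[c] for p in sample) / n for c in range(num_channels)]
    stds = [
        math.sqrt(sum((p[c] - means[c]) ** 2 for p in sample) / n)
        for c in range(num_channels)
    ]
    return means, stds


if __name__ == "__main__":
    gen = random.Random(42)
    # 20,000 synthetic 3-band pixels with values in [0, 255].
    image = [tuple(gen.uniform(0, 255) for _ in range(3)) for _ in range(20_000)]
    full_means, _ = sampled_channel_stats(image, sample_prob=1.0)
    est_means, _ = sampled_channel_stats(image, sample_prob=0.05)
    print("all pixels:", full_means)
    print("5% sample: ", est_means)
```

With `sample_prob=0.05`, only about 5% of the pixels enter the computation, so the resulting means and standard deviations are estimates rather than exact values; for large scenes they are usually close enough for normalization.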

Vector Processing

- We have added a new `VectorSource` abstraction, which makes the framework extendable to new sources of vector data.
- In addition to a `GeoJSONSource`, we have added a `VectorTileVectorSource`, which can read Mapbox vector tiles, either as an `.mbtiles` file or as a `{z}/{x}/{y}` URI schema.
- All vector sources now handle arbitrary geometries by splitting multi-geometries and buffering points and lines into polygons.
- The `class_ids` for features can now be inferred using Mapbox GL filters, using code from Label Maker (thanks!). To infer a `class_id` of 1 for features with a `building` property, use `VectorSourceConfigBuilder.with_class_inference({1: ['has', 'building']})`.
- Semantic segmentation can now generate polygon output in the form of GeoJSON files, in addition to raster masks, and these are evaluated using the SpaceNet evaluation metric. To generate polygons for class 1 with a denoise radius of 3, use `SemanticSegmentationRasterStoreConfigBuilder.with_vector_output([{'mode': 'polygons', 'class_id': 1, 'denoise': 3}])`. (Note: this functionality does not work with predict packages yet.)
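To make filter-based class inference concrete, the sketch below evaluates a small subset of the legacy Mapbox GL filter syntax (`has`, `!has`, `==`, `!=`, `in`, `!in`, `all`, `any`, `none`) against a feature's properties and returns the first matching class id. It is a simplified, hypothetical stand-in, not the Label Maker code that Raster Vision uses; the names `matches_filter` and `infer_class_id` are invented here for illustration.

```python
from typing import Any, Dict, List, Mapping, Optional


def matches_filter(props: Mapping[str, Any], flt: List[Any]) -> bool:
    """Return True if feature properties satisfy a legacy Mapbox GL filter."""
    op = flt[0]
    if op == "has":
        return flt[1] in props
    if op == "!has":
        return flt[1] not in props
    if op == "==":
        # A missing property never equals a (non-None) value.
        return props.get(flt[1]) == flt[2]
    if op == "!=":
        # A missing property counts as "not equal".
        return props.get(flt[1]) != flt[2]
    if op == "in":
        return flt[1] in props and props[flt[1]] in flt[2:]
    if op == "!in":
        return not (flt[1] in props and props[flt[1]] in flt[2:])
    if op == "all":
        return all(matches_filter(props, f) for f in flt[1:])
    if op == "any":
        return any(matches_filter(props, f) for f in flt[1:])
    if op == "none":
        return not any(matches_filter(props, f) for f in flt[1:])
    raise ValueError(f"unsupported filter operator: {op!r}")


def infer_class_id(
    props: Mapping[str, Any],
    class_id_to_filter: Dict[int, List[Any]],
    default_class_id: Optional[int] = None,
) -> Optional[int]:
    """Return the first class id whose filter matches, else the default."""
    # Filters are tried in dict insertion order; the first match wins.
    for class_id, flt in class_id_to_filter.items():
        if matches_filter(props, flt):
            return class_id
    return default_class_id


if __name__ == "__main__":
    class_map = {1: ["has", "building"], 2: ["==", "highway", "primary"]}
    print(infer_class_id({"building": "yes"}, class_map))  # 1
    print(infer_class_id({"highway": "primary"}, class_map))  # 2
    print(infer_class_id({"natural": "water"}, class_map, default_class_id=0))  # 0
```

Trying filters in order keeps the mapping predictable when a feature matches more than one filter, for example a feature tagged both `building` and `highway`.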

Other Improvements

- The `GeoTIFFSource` and `ImageSource` raster sources have been merged into `RasterioSource`, which can read any imagery that can be opened by Rasterio/GDAL.
- Rasters can be shifted by a number of meters using `RasterioSourceConfigBuilder.with_shifts(x, y)`. This accommodates misalignment between rasters and labels, which often happens when using labels from OSM.
- There is now an AWS S3 `requester_pays` option in the Raster Vision config, which allows using requester pays buckets.
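Conceptually, shifting a raster by a fixed offset in meters changes only where its pixels are placed on the map, not the pixel values. The sketch below applies such a shift to a GDAL-style geotransform tuple, assuming the raster is in a projected CRS whose units are meters (such as UTM) and that a positive `y` shift moves the raster north. The helpers `shift_geotransform` and `shift_in_pixels` are hypothetical and do not describe how `RasterioSourceConfigBuilder.with_shifts` is implemented internally.

```python
from typing import Tuple

# GDAL ordering: (x_origin, pixel_width, row_rotation,
#                 y_origin, column_rotation, pixel_height)
GeoTransform = Tuple[float, float, float, float, float, float]


def shift_geotransform(gt: GeoTransform, x_shift_m: float, y_shift_m: float) -> GeoTransform:
    """Translate a geotransform by (x_shift_m, y_shift_m) meters.

    Assumes a projected CRS in meters. Only the origin moves; the
    resolution and rotation terms are left unchanged.
    """
    x_origin, pixel_width, row_rot, y_origin, col_rot, pixel_height = gt
    return (x_origin + x_shift_m, pixel_width, row_rot,
            y_origin + y_shift_m, col_rot, pixel_height)


def shift_in_pixels(gt: GeoTransform, x_shift_m: float, y_shift_m: float) -> Tuple[float, float]:
    """Express the same shift as (columns, rows) for a north-up raster.

    pixel_height is negative for north-up rasters, so a northward (positive)
    y shift corresponds to a negative number of rows.
    """
    pixel_width, pixel_height = gt[1], gt[5]
    return x_shift_m / pixel_width, y_shift_m / pixel_height


if __name__ == "__main__":
    # A 30 cm north-up raster in a UTM-like CRS.
    gt = (500000.0, 0.3, 0.0, 4000000.0, 0.0, -0.3)
    print(shift_geotransform(gt, 1.5, -0.6))
    print(shift_in_pixels(gt, 1.5, -0.6))
```

Expressing the offset in pixels is a quick sanity check: a misalignment of a few meters on high-resolution imagery can amount to many pixels, which is enough to noticeably degrade label quality.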

Try 0.9

We hope that people will try 0.9 and let us know what they think in our Gitter channel or GitHub issues. As part of this release candidate, we have tried to make it easier to get started with Raster Vision. We have simplified and standardized our examples repository, so that there is a test option for each experiment, and the location of data is more configurable. To make it easier to set up AWS Batch for use with Raster Vision, we have switched our deployment code from Terraform to CloudFormation, which is simpler and uses a GUI for configuration.

Note that the QGIS plugin for 0.9 is not available yet, but we plan on releasing it soon.

What’s next?

Here are some of the tasks we are planning for the coming months:

- Improve prediction performance, especially with respect to GPU utilization.

- Implement backward compatibility in a more explicit, systematic way.

- Add support for custom commands for more flexible workflows.

- Add better examples and documentation for creating custom plugins.

- Implement a backend plugin based on fastai and PyTorch, which will make it easier to handle multi-channel imagery, use cutting-edge techniques like mixed-precision training, and implement custom models.