The first lesson we learned when we started applying deep learning techniques to real-world problems was that lying about our results was much easier than telling the truth. Let’s start with a simple example: if you build a computer vision model that detects needles in images of haystacks, and you simply train it to predict “haystack” on 100% of the examples you show it, you’re likely to have built a model with greater than 99% accuracy.

For the purposes of this post, I won’t be focusing on the traditional ways that people lie with statistics–plenty has been written by much smarter people on the subject. Instead, I’d like to focus on the messier aspects of untruths that we’ve stumbled across and fallen victim to–after all, most machine learning applied to real problems is a matter of judgment (not fact), and judgment is messy.

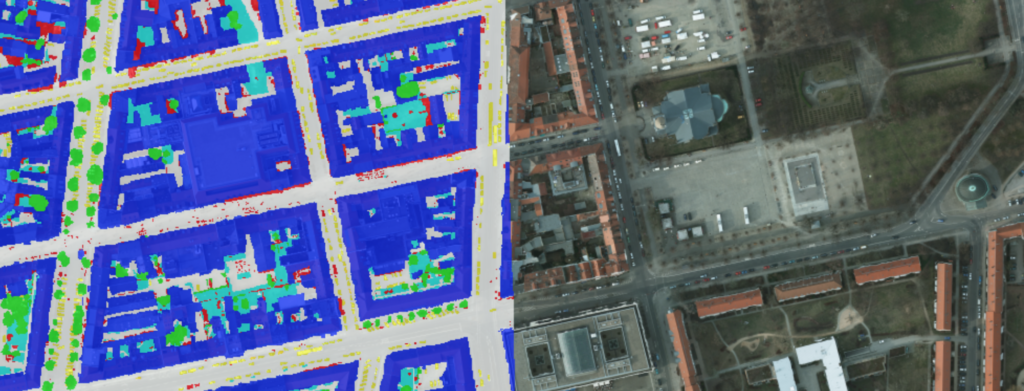

Let’s take the example of a machine learning model we trained to differentiate between six classes found in aerial imagery¹: cars, buildings, trees, low vegetation, impervious surface, and clutter. For expediency, we’ll concentrate on just the least controversial category, “car.” A car is a car, right? Here are a few ways a simple A → B mapping can get…complicated, especially when you measure it as if it were an objective observation.

Cascading categories

Take the case of cars obstructed by tree canopy — each pixel can only have one label, so which category takes precedent? The curators of this dataset chose to always prioritize “tree” above “car” (and building, clutter, impervious surface, etc.) — because this was such a common occurrence throughout the training dataset, the model learned to do just the same:

And so we encounter our first philosophical question: was the model 100% accurate here because it made the same decision as its human counterparts, or only 33% accurate, since it missed 2/3 cars? Here, we’re already starting to see that “accuracy” is a measure of adherence to (someone’s) bias. Sometimes the more interesting question to ask is not “how accurate is this model,” but rather, “how useful is this opinion?”

Tricky tradeoffs

Let’s take a more entertaining example: cars on the roofs of buildings. In one of the models we trained on this dataset, we not only provided it visible imagery, but also an elevation model so it could “see” the height of objects in the imagery. Height data dramatically improved accuracy of the model overall, especially in categories like “tree” and “building”. However, in this particular training dataset exposed rooftop parking was extremely rare, so the model learned that “car” was almost never correlated with a tall height. As a result, it completely missed these very obvious cars on the roof of a parking deck:

If we wanted to account for this edge case, we could proactively look for examples of rooftop cars and add them to our training dataset. But this might be the kind of error we’re willing to live with given the effort involved in finding more examples of this rare case. How do we capture this limitation in a statistical measure? Do we supplement our overall accuracy score with a separate “cars on roofs” score? In my opinion, the best measure of a model’s performance isn’t quantitative at all, it’s simply first-hand experience looking at real results. You might be surprised how great the results of a “bad” (F1 = 0.65) classifier is at picking out damaged buildings in large satellite images or how sloppy the results of a “good” (F1 = 0.90) semantic segmentation model is at extracting building footprints.

Indefinite definitions

What counts as a car? A sedan is a car. A truck is a car. Is a bulldozer a car? According to our training dataset, it is, while a mobile crane is not:

A fun corollary of an ill-defined class is an object that straddles two classes. In this example, we’re looking at a food truck:

Dubious data

Real-world data is imperfect, and whether or not you count predictions made on bad data can have a significant effect on the statistical evaluation of a model. The beauty of deep learning, though, is that it can handle significant variance/noise without getting terribly confused. Take the example of badly warped cars in a stitched-together image mosaic:

Don’t get duped

The next time you’re watching someone present on the results of their machine learning research, or getting sold a new AI-powered product, or reading a suspect blog post from a total stranger…look for some of the red flags that might suggest you’re not seeing the whole picture:

- A single impressive accuracy score is shown with no explanation of the metric being used or why it’s appropriate (e.g. Accuracy, Precision, Recall, F1 Score, Average Precision, Mean Average Precision, Intersection over Union, etc.)

- True positive/negative examples are shown…but no false positive/negative examples are shown

- The pedigree and magnitude of the data used to train the model is never discussed (or is intentionally obfuscated)

If you’re interested in more detail on anything covered in this post, send me an email with the subject line “LIES!” and you’ll surely get my attention.