Late last year, we sold our HunchLab product to ShotSpotter. This was a tough decision and was personally bittersweet for me. My first job after graduate school was to help build a crime analysis and mapping unit for the Philadelphia Police Department. Other than Azavea, it was some of the most meaningful work I’ve done in my career. The lessons I learned in the two years I worked for the Philadelphia PD have proved important throughout my career, and one of the ideas I developed became the foundation for the first version of HunchLab.

Background

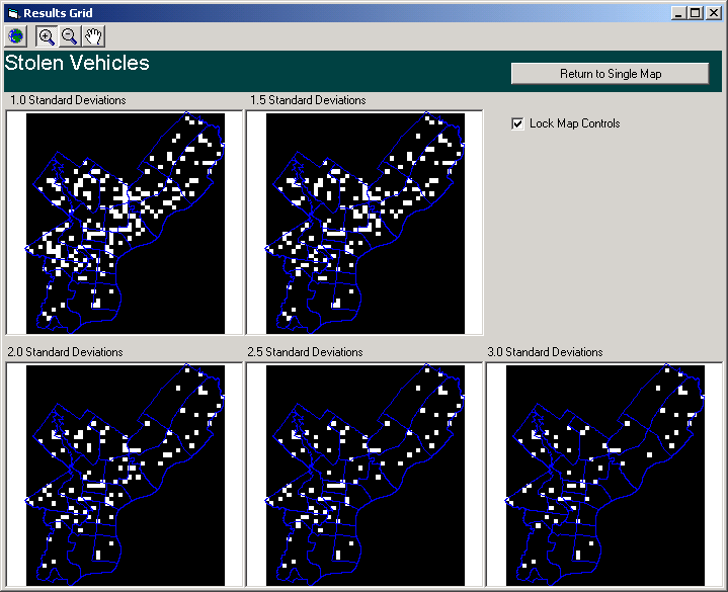

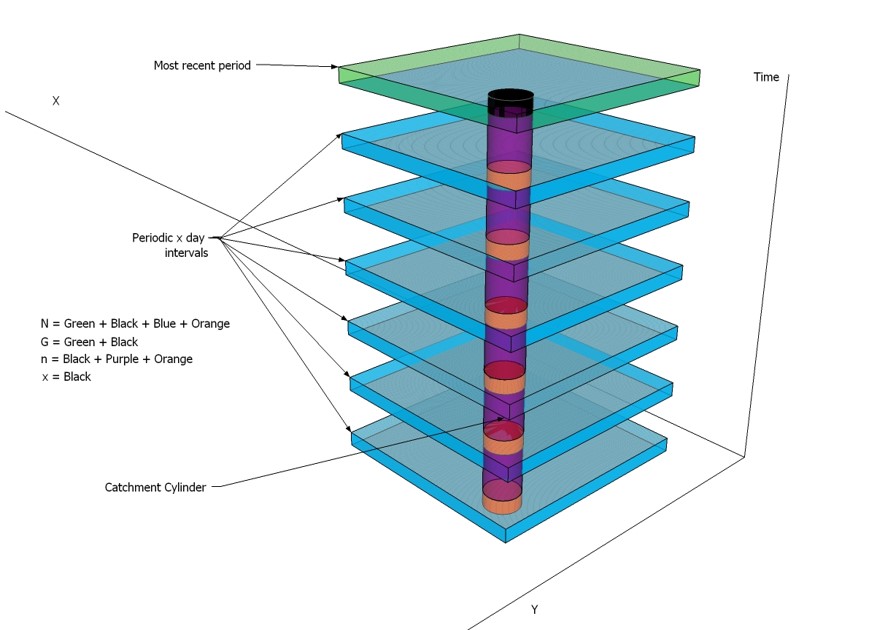

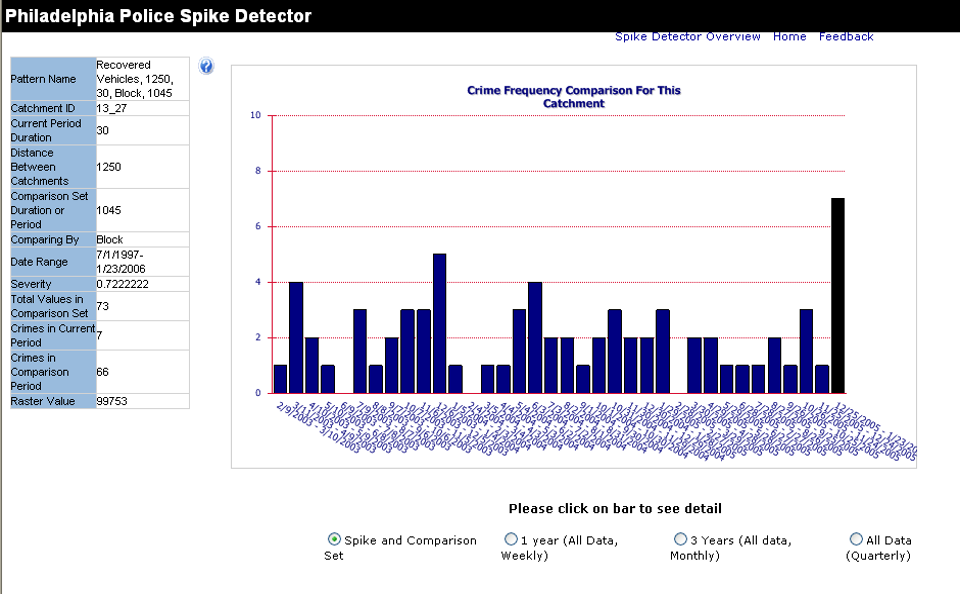

The first version of HunchLab began as an attempt to build a data- and statistics-driven “early warning system” that could scan new crime data on a daily basis, compare it to historical data, identify spikes in crime, and notify people about the changes in a timely manner. I built a prototype when I worked at the Philadelphia PD, wrote and presented a paper about the prototype, and got some critical feedback from some academic colleagues. And then I put it on a shelf.
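To make the early-warning idea concrete, here is a minimal sketch of one simple way to flag a spike: compare today's count with the mean and standard deviation of historical daily counts. This is an illustration only, not the method used in the prototype or in HunchLab; the function name `detect_spike`, the z-score approach, and the threshold of 2.0 are all assumptions chosen for the example.

```python
from statistics import mean, stdev


def detect_spike(history, today, threshold=2.0):
    """Compare today's count with historical daily counts.

    Returns (is_spike, z_score). The z-score test and the default
    threshold are illustrative assumptions, not the original method.
    """
    if len(history) < 2:
        raise ValueError("need at least two historical observations")
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation
    if sigma == 0:
        # Perfectly flat history: treat any increase as a spike.
        if today > mu:
            return True, float("inf")
        return False, 0.0
    z = (today - mu) / sigma
    return z > threshold, z


# Hypothetical daily counts for one area and crime type.
past_week = [3, 4, 5, 4, 3, 5, 4]
is_spike, z = detect_spike(past_week, today=9)
print(f"spike={is_spike}, z={z:.2f}")
```

A production system would also need to account for seasonality, day-of-week effects, and spatial clustering, which is the kind of refinement a simple test like this leaves out.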

Six years later, my former boss at the Philadelphia PD, Charlie Brennan, contacted me about potential grant funding to turn this prototype into something more useful that could operate on the web. Using that funding, Azavea built the Crime Spike Detector, a somewhat more advanced prototype. We worked with a spatial statistician, Professor Tony Smith, to improve on the rather naive statistical techniques I had originally used. We built the web application and server-side statistics engine and made it available for two police districts in Philadelphia. We got great feedback from the people who used it and then organized that feedback into a research grant proposal to the National Science Foundation (NSF). The aim of our proposal was to create a more general version that could be used in other cities.

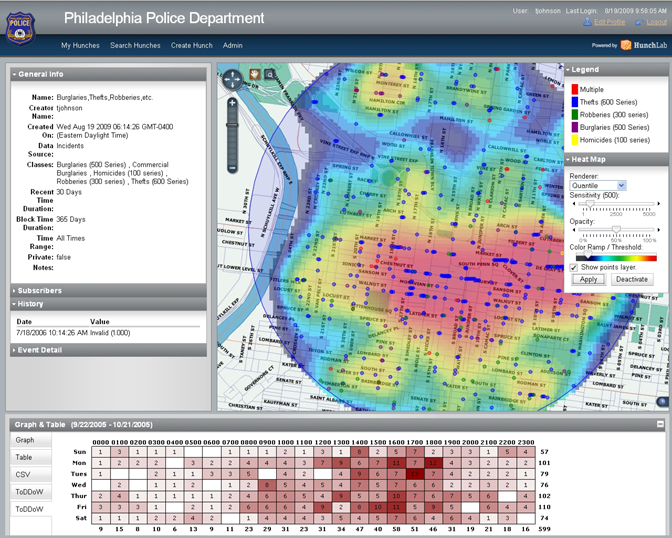

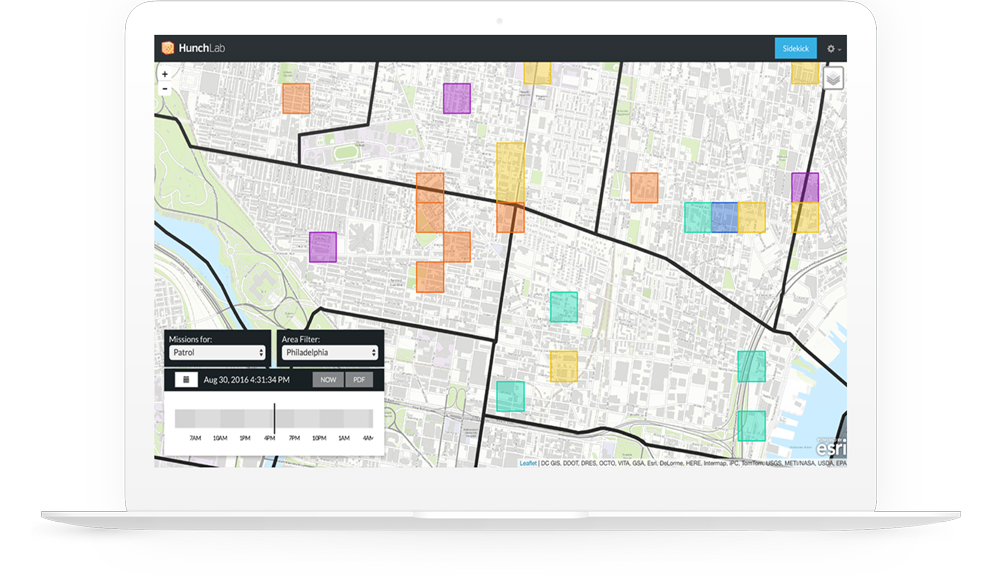

We were fortunate that NSF funded the project, and the funding enabled us to build the first commercial version of HunchLab. This initial effort was used in a few police departments, but it was never a breakout success from a business perspective. However, one of my colleagues, Jeremy Heffner, had an idea for something quite different. Rather than an early warning about crime spikes, he believed we could build a crime risk forecasting system. This was not Minority Report; we could not predict what people would do. But we could use a combination of historical crime data and other data (time of year, day of the week, proximity to bars, lighting, weather, etc.) to generate a forecast of the locations where a crime is somewhat more likely to occur on a given day of the week or at a given time of day. Given this information on a shift-by-shift basis, we believed that police departments could more effectively carry out neighborhood patrols. We didn’t have any grant funding anymore, so Azavea invested its own money (Azavea is not an investor-funded firm, so when we make an investment, it is out of our own cash flow), and the result was HunchLab 2.0. And it worked; we could do a better job of forecasting the likelihood of different crimes occurring than the state of the art.

But by moving from early warning to forecasting, we understood that we were entering a new landscape; we would become part of what some people were starting to call “predictive policing”. I don’t think the “predictive” part of the moniker is very accurate, but the evocative alliteration has made the term sticky, and it may also sound rather sinister to some people. Over the past several years, a series of publicly documented incidents has exposed violence, civil rights violations, and abuse of power by police officers across the United States. Law enforcement agencies have rightfully come under increasing scrutiny. Further, “predictive policing” tools (license plate readers, facial recognition software, etc.) have been used in some communities to engage in pervasive surveillance of citizens, something that I believe is wrong. We’re a B Corporation with a mission to not only advance the state of the art but also apply it for positive civic, social, and environmental impact. Developing a tool that would support surveillance or violate civil rights was not something I viewed as aligned with our mission.

Mission alignment

So how do we reconcile Azavea’s mission with engagement in a field carrying out activities with which we don’t agree? We began the work with four basic beliefs in mind.

- First, we believe that every citizen has a right to public services that ensure safety to their person and prevent theft of their property. Throughout the world, law enforcement agencies and an independent judiciary have combined to contribute to reductions in crime and social disorder, improving public safety and social justice over several centuries. There is compelling evidence that police patrols can reduce crime by preventing it, and, when a crime does occur, officers can respond more quickly when they are closer to the event. HunchLab can contribute to public safety by allocating police patrols more effectively, patrolling the right places at the right time.

- Second, we believe that too many people are arrested in the United States and that policing and judicial powers have been applied in a manner that has been both biased and destructive to many groups in our society, particularly to minorities and immigrants. Arresting more people is not our goal and should not be the objective of law enforcement agencies.

- Third, we believe that software, machine learning, and pervasive data collection have the potential to generate both great public good and great harm in our communities. We need to design our work to maximize public good and minimize harm.

- Fourth, all data will have bias embedded in its collection, categorization, and use. We cannot eliminate bias, but we must take steps to reduce it.

We wanted to both design and operate software that acknowledged these observations and aimed to improve on the status quo. We were convinced that HunchLab could contribute to that improvement by using data to overcome bias as well as to track police activity and its results. With the above in mind, we set the following guidelines for ourselves:

- Forecast places, not people: We would forecast locations with the highest likelihood of a crime at a given point in time. We do not attempt to make predictions about the actions of people.

- Limit input data to places, not people: We would not use data about people – no arrests, no social media, no gang status, no criminal background information.

- Reported events: We would generate forecasts based on public reports of crime, not arrests or other data originating in law enforcement activities.

- Supplement reported data: One way to reduce bias is to draw on multiple sources of data. We knew that we could generate forecasts using just the crime reports, but we believed that by supplementing reported crimes with other relevant data, ideally from independent, open sources, we could mitigate bias in the reporting data. Typical examples might include lighting, school schedules, locations of community infrastructure, weather, or locations of bars.

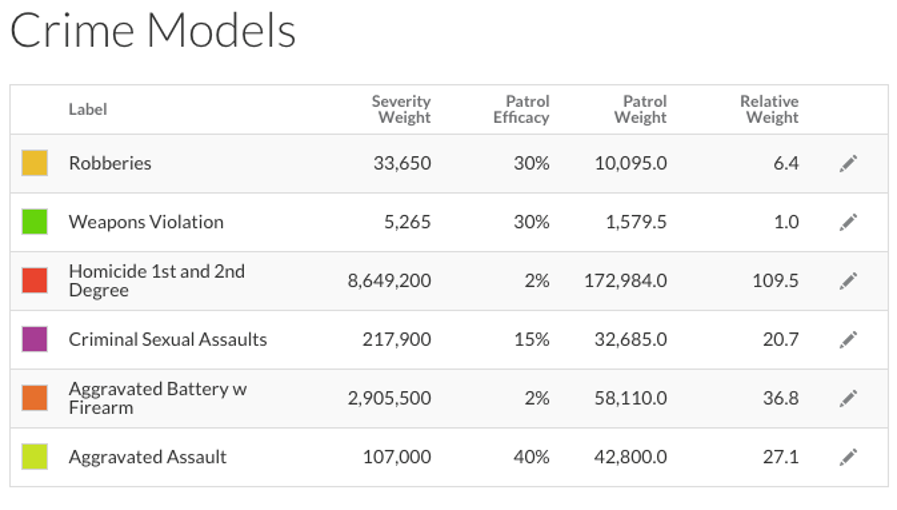

- Design for maximizing the reduction of harm: We would weight the forecasts based on the likely social cost of an event; we would track the amount of time an officer patrols an area in order to prevent over-patrolling; and we would focus on preventable crimes.

- Oversight and accountability: We would log data inputs used and data outputs generated by each model.

- Proactive transparency: We would be committed to engaging with journalists, researchers, and the public to share what we do and how HunchLab works.

The last point was perhaps the most important to me. As Justice Louis Brandeis noted, “Sunlight is said to be the best of disinfectants.” I believe the best mechanism we will have for preventing and mitigating harm is transparency. Companies serving law enforcement agencies and other public institutions often hide behind claims of proprietary algorithms to avoid scrutiny by journalists, researchers, and members of the public. We felt that HunchLab could make a contribution by setting a different standard, one of transparency and public engagement, and we put our shoulders to the wheel in this direction. We have actively supported press and academic articles, filmmakers, funders, and community groups. We developed a Citizen’s Guide, outlining how the software worked. We offered discounts to departments willing to engage in a public discussion of how they were using the software.
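Two of the guidelines above, weighting forecasts by likely social cost and logging each model's inputs and outputs, lend themselves to a small illustration. The sketch below is hypothetical and is not HunchLab's code: the grid-cell IDs, crime categories, weight values, and the function names `rank_cells` and `audit_record` are all assumptions made for the example.

```python
import json
from datetime import datetime, timezone

# Hypothetical relative social-cost weights per crime type (assumed values).
SEVERITY_WEIGHTS = {"assault": 10.0, "burglary": 3.0, "vehicle_theft": 1.0}


def rank_cells(forecasts, weights=SEVERITY_WEIGHTS):
    """Rank map cells by harm-weighted risk.

    forecasts maps a cell ID to {crime_type: forecast probability}.
    Returns a list of (cell_id, score) pairs, highest score first.
    """
    scores = {
        cell: sum(weights[crime] * prob for crime, prob in probs.items())
        for cell, probs in forecasts.items()
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


def audit_record(forecasts, ranking, weights=SEVERITY_WEIGHTS):
    """Serialize the inputs and outputs of one run so they can be reviewed later."""
    return json.dumps(
        {
            "generated_at": datetime.now(timezone.utc).isoformat(),
            "inputs": {"forecasts": forecasts, "weights": weights},
            "outputs": ranking,
        },
        sort_keys=True,
    )


# Made-up forecasts for two cells, for illustration only.
example = {
    "cell_A": {"assault": 0.01, "burglary": 0.10},
    "cell_B": {"assault": 0.05, "vehicle_theft": 0.05},
}
ranking = rank_cells(example)
print(ranking[0][0])  # the cell with the highest harm-weighted score
print(audit_record(example, ranking))
```

Keeping the weights in plain, inspectable data rather than buried in model code is one way to make the value judgments behind a ranking open to outside review.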

In many ways, I think this approach has been successful. All of the police departments with which we have worked have approached use of the product with genuinely positive intentions and goodwill. Further, none have asked to use personal data, arrest data, social media, or other similar data about individuals. Rather, our approach has been welcomed as being significantly different from that of most companies.

Why sell HunchLab?

There were multiple factors, but the most important was that the product was gaining some traction – we had just won over our biggest customer, the Chicago Police Department, and they were seeing some success with the software. It became clear that HunchLab had a lot of potential, but we also realized that it was going to require a major investment in sales and marketing, and Azavea simply didn’t have the capital to pull it off. We could have taken on investors, but HunchLab was only a small part of Azavea. There may be some exceptions, but I am not generally interested in operating an investor-funded company, and I didn’t want to take on investors for the entire company in order to support a product that only represented a small fraction of the organization. We could have spun HunchLab out into a separate company, but this would have been costly and few investors were interested in this approach. If HunchLab was going to reach its full potential, we would need to find a new home for it.

Over the past year, we prepared prospectus materials, selected an investment banker (Falcon Capital), and began marketing the product. The process culminated in the sale to ShotSpotter that closed in October. I couldn’t be happier with the buyer. In our discussions, they seemed to share many of the same observations that I described above, and I think they have the resources, staff, and experience to enable HunchLab to grow more effectively than would have been the case from within Azavea. ShotSpotter provides software solutions that help under-served communities and law enforcement respond to and reduce gun violence. They do this in a way that respects the privacy of individuals. ShotSpotter’s plans for future development of the HunchLab product (which they have renamed ‘Missions’) will further that purpose, helping police departments more effectively utilize their patrol resources and protect their communities from violent crime. Over the next year, I look forward to working with them to integrate our work with their other offerings.

Other observations

The process of selling HunchLab has driven home a number of lessons for me. First, building any product is hard – the idea is the easy part, but the execution is exceedingly difficult, and building and sustaining a product from within a firm that specializes in professional services is even more challenging. HunchLab was not the first product we built, but it coexisted with custom software projects on the same team, and it was consistently challenging to maintain a steady level of investment in features while also serving other customers. I’m incredibly grateful for the hard work that our software engineers, designers, ops engineers, project managers, and business development colleagues put into making this complex orchestration work on HunchLab as well as our other product investments.

Second, despite all of the advantages of remaining a bootstrapped firm, there are limits to growth for a small, privately held firm. In early 2017, we were investing in four SaaS products – HunchLab, OpenTreeMap, Cicero, and Raster Foundry – plus two major open source libraries: GeoTrellis and Raster Vision. It was too much, and we were spread too thin. In 2017, we shifted OpenTreeMap to maintenance mode and put new feature development on hiatus. And now we’ve sold HunchLab. My hope is that these two decisions will enable us to put more resources and focus behind fewer product efforts. We have already stepped up investment in our machine learning and computer vision work (Raster Vision) and in integrating it with Raster Foundry, our platform for managing and analyzing Earth observation imagery.

Third, machine learning and artificial intelligence are going to have a profound impact on our society, and the decisions we make in the next decade will affect how we live for the next century or more. We will need to find creative, inventive ways to reconcile our values with the technology we create, and this will represent an enormous intellectual and technical challenge. We will have to develop new norms, new laws, and new institutions, and we will need to do so quickly. I’m encouraged by steps like the Google AI Principles and the Future of Life Institute’s Autonomous Weapons Pledge. Many more such efforts will be necessary in coming years.

Fourth, I am more convinced than ever that some form of algorithmic transparency is going to be critical to balancing the rights of citizens with the potential for public good arising from new technological advances. Software that can affect the course of our lives, whether through law enforcement, human services, education, or other applications, should be required to disclose its algorithmic methodology. This type of transparency should apply not only to source code but also to publication of sample data, model parameters, input data, and explanations of how the models work. In some cases, this may require additional technical advances. For example, many of the neural network models widely used in contemporary artificial intelligence applications are notoriously difficult to explain, and new technology may be necessary to bring explainable AI within reach. Nonetheless, as data and software are more widely used to manage our communities, I believe we should expect and demand disclosure of algorithms that affect our lives, particularly for domains that overlap with government functions. I am encouraged by steps such as New York City’s task force for examining the impact of algorithms on civic life. It is a tentative but nevertheless important step forward.

So we’ve sold HunchLab, but we haven’t separated ourselves from the challenges it creates or the challenges that our society faces in integrating technology that can deliver both help and harm. Are you facing challenges balancing the impact of technology with public good? Azavea remains committed to finding ways to apply leading-edge technology for positive impact.