Azavea is pleased to announce the release of Raster Vision, a new open source framework for deep learning on satellite and aerial imagery.

What is Raster Vision?

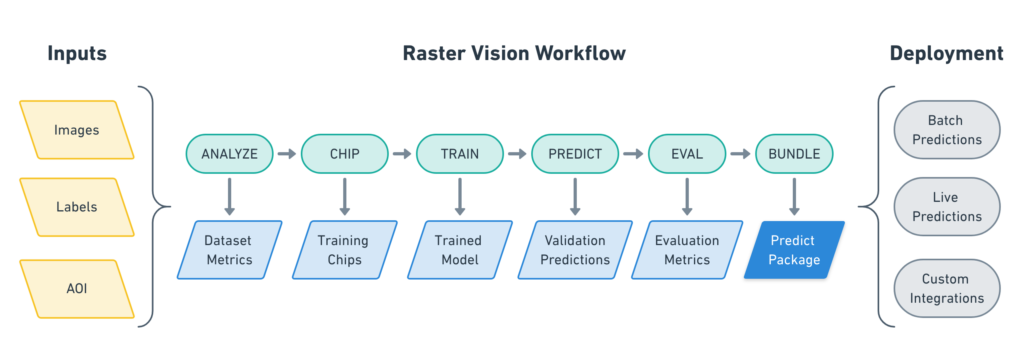

Raster Vision is an open source framework for Python developers building computer vision models on satellite, aerial, and other large imagery sets, including non-georeferenced data like oblique drone imagery. It allows engineers to quickly and repeatably configure experiments that run through the core components of a machine learning workflow: analyzing training data, creating training chips, training models, creating predictions, evaluating models, and bundling the model files and configuration for easy deployment.

The input to a Raster Vision workflow is a set of images and training data, optionally with Areas of Interest (AOIs) that describe where the images are labeled. Running a workflow produces evaluation metrics and a packaged model and configuration that enable easy deployment. Raster Vision also supports running multiple experiments at once to find the best model (or models) to deploy.
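To give a rough feel for what the "creating training chips" step means, here is a minimal pure-Python sketch. It is not Raster Vision's API: it slides a fixed-size window over a large image and keeps only the windows that fall completely inside a labeled AOI. The function names, the pixel-coordinate box representation, and the rule that a chip must lie entirely within an AOI are all assumptions made for this illustration.

```python
from typing import Iterator, List, Tuple

# Hypothetical box representation: (row_min, col_min, row_max, col_max) in
# pixel coordinates, with the max values exclusive. Not Raster Vision's API.
Box = Tuple[int, int, int, int]


def sliding_windows(height: int, width: int, chip_size: int, stride: int) -> Iterator[Box]:
    """Yield chip-sized windows that fit entirely inside a height x width image."""
    for row in range(0, height - chip_size + 1, stride):
        for col in range(0, width - chip_size + 1, stride):
            yield (row, col, row + chip_size, col + chip_size)


def inside(window: Box, aoi: Box) -> bool:
    """Return True if the window lies completely within the AOI box."""
    r0, c0, r1, c1 = window
    a0, b0, a1, b1 = aoi
    return r0 >= a0 and c0 >= b0 and r1 <= a1 and c1 <= b1


def training_chips(height: int, width: int, chip_size: int, stride: int,
                   aois: List[Box]) -> List[Box]:
    """Keep only the windows that fall inside at least one labeled AOI
    (an assumed filtering rule for this sketch)."""
    return [
        window
        for window in sliding_windows(height, width, chip_size, stride)
        if any(inside(window, aoi) for aoi in aois)
    ]


if __name__ == "__main__":
    # A 1000x1000 image, 300-pixel chips, and one AOI covering the top-left corner.
    chips = training_chips(1000, 1000, 300, 300, [(0, 0, 600, 600)])
    print(len(chips), chips)
```

A real framework also has to read pixels from large georeferenced files and rasterize the matching labels for each chip; the sketch only covers the window selection, which is the part the AOIs control.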

For more information about what Raster Vision is and how to use it, see our docs at https://docs.rastervision.io.

Why did we build it?

In the foundational book “The Cathedral and the Bazaar”, Eric Raymond posits that good open source software often “starts by scratching a developer’s personal itch.” In this case, Raster Vision was created to scratch the itch of a team of developers who were applying deep learning techniques to satellite and aerial imagery. Azavea has been working with deep learning over the past couple of years, starting with identifying tree species from leaves for a project with the USDA and moving on to a wide range of computer vision tasks on other large imagery.

As we began our work, we discovered a rich ecosystem of amazing open source deep learning tools: projects such as TensorFlow and Keras let us get great results by starting from existing neural network architectures and pre-trained weights. However, we were missing a tool that would help us handle geospatial imagery efficiently and let us quickly take on new projects, prototype new approaches, and get results in a repeatable and comparable manner. Every project started with a new set of data processing scripts to help deep learning libraries deal with large geospatial data, every new set of hyperparameters required another version of the codebase (or else the previous changes were lost to history), and every new training run required manually creating AWS GPU instances and connecting to them remotely. I remember a time last year when I saw Lewis with 10 terminals open, ssh’d into 10 different AWS instances, using a tool to type into all 10 terminals at once to kick off 10 different experiments.

We found ourselves spending a lot of time fumbling around with hacked-together scripts, written in a frenzy to get to “the good stuff”, and feeling a lot like this poor guy:

And of course we thought: There has to be a better way!

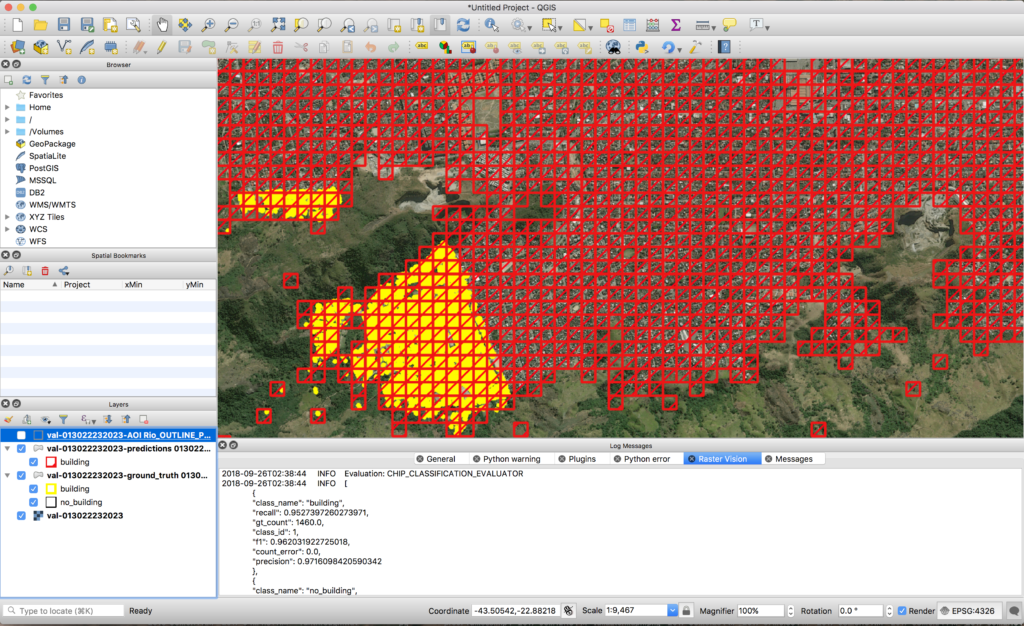

We built Raster Vision because it was the tool we found ourselves constantly wishing we had. And it has certainly scratched that itch, making our team at Azavea more productive than ever. Project startups that used to take a month before Raster Vision now take days. We no longer have to work hard to prepare data for training: we point Raster Vision at our images and labels in AWS S3 and let it take care of the rest. We’re able to quickly train new models against existing datasets by tweaking a few lines of code and running a command, all on AWS instances we never have to spin up or down manually. We can run many experiments in parallel and quickly identify effective techniques to iterate on further. We can visually evaluate and share results in QGIS immediately after an experiment finishes, using the Raster Vision QGIS plugin.

We created Raster Vision to be flexible and extensible, with a plugin system that allows users to write their own backends, tasks, filesystems, and more without having to modify the main codebase. Raster Vision lets us solve our problems once, expose those solutions in the framework, and never have to worry about those problems again.

Why did we open source it?

At Azavea, we believe a market advances best when the participants driving it are transparent, open, and collaborative. We want to cultivate a community of software developers, part-time hackers, AI teams, and researchers who will use Raster Vision to accelerate their own processes and focus on the ideas that make their work unique, provide value, advance the state of the art, and realize the tremendous potential impact of deep learning in their domains. We also hope that by using Raster Vision, users will be more likely to contribute back to open source, whether directly to Raster Vision or in other ways, to the benefit of us all.

Our goal is to make Raster Vision a successful open source project, and a successful open source project requires a community of people using and developing it successfully. We’ve taken what we’ve learned cultivating a community around the GeoTrellis project and are applying those lessons to Raster Vision in order to be good open source citizens from day one. We spent time making our documentation thorough and easy to update. We have a repository of examples that show how to use Raster Vision on open datasets like SpaceNet, and another repository that shows how to deploy an AWS Batch setup to run Raster Vision. We have decent test coverage, with code coverage reports for every merged pull request. We use Travis for continuous integration, including publishing Docker images to quay.io for every merge into develop or a release branch. We encourage people to engage with the project: open issues, create pull requests, and reach out on our Gitter channel and mailing list.

What’s next?

We’ve released Raster Vision, but the work is far from finished. We plan to add support for vector tile labels and tile service raster sources in the near future, so that Raster Vision can easily consume large vector datasets such as OSM, as well as imagery endpoints, when creating training and validation data. We’re experimenting with different backends, such as ChainerCV and GluonCV, to add support for even more models. We’re adding other computer vision tasks, such as chip regression (e.g. counting buildings), instance segmentation, and post-processing of segmentation output to allow for geometric feature extraction. There’s a growing list of features and enhancements that we’d like to make, and we would be grateful to hear the community’s ideas on how to improve Raster Vision.

If you think that this technology could add value and help meet challenges that your organization faces, but you need assistance integrating it, please reach out to us. We provide the expertise, along with tools like Raster Vision, to help clients integrate machine learning into their own workflows.

Raster Vision represents the culmination of hard work from the Azavea R&D Team and external contributors. Special shout-out to Lewis Fishgold, the original author of Raster Vision.